Deploying on Azure Kubernetes Service (AKS)

Prerequisites

Create a Linux machine, or use an existing one, to run the ABB Ability™ History AKS deployment commands, and install the following prerequisites on it before deployment.

Note: Installation of the prerequisites may be automated in the future.

- Azure CLI (az) - for interacting with Azure

- jq - the jq command for parsing JSON data in Linux

- IPsec (strongSwan) - https://www.linode.com/docs/guides/strongswan-vpn-server-install/

- Helm - the package manager for Kubernetes

- kubectl - a command-line tool used to run commands against Kubernetes clusters - https://kubernetes.io/docs/tasks/tools/install-kubectl-linux/

- Bastion extension for Azure CLI - https://learn.microsoft.com/en-us/cli/azure/network/bastion?view=azure-cli-latest

- unzip - for extracting compressed files

- libxml2 - for parsing XML files: sudo snap install libxml2 # version 2.9.9+pkg-0333

- libxml2-utils - for parsing XML files (provides xmllint): sudo apt install libxml2-utils # version 2.9.13+dfsg-1ubuntu0.3

- Ansible - for automating application deployment, configuration management and continuous delivery: sudo apt install ansible - https://www.ansible.com/

- ansible-core: sudo apt install ansible-core
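Before starting, it can help to confirm that the tools listed above are on the PATH. The following is a minimal sketch, not part of the delivered scripts: the function name missing_tools is hypothetical, and the executable names are assumed to be the ones installed by the packages above.

```shell
# Print the name of every tool passed as an argument that is not on the PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Executable names are assumptions derived from the prerequisite list.
MISSING=$(missing_tools az jq ipsec helm kubectl unzip xmllint ansible-playbook)
if [ -n "$MISSING" ]; then
    echo "Missing prerequisites:" $MISSING
else
    echo "All prerequisites found."
fi
```

Any tool reported as missing should be installed before continuing with the deployment.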

ABB Ability™ History AKS Deployment Files

Download the following zip file, which contains all the scripts required for the ABB Ability™ History AKS deployment, to a directory of your choice on the Linux machine (for example /home/work), and extract it.

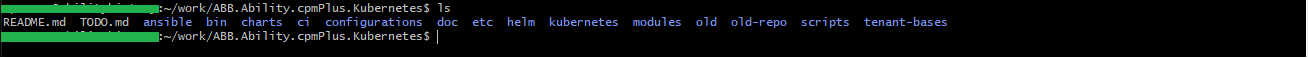

Now change to the extracted folder and make sure that the files below are present in the ABB.Ability.cpmPlus.Kubernetes directory.

Brief Overview Of Deployment

Deploying an Azure Kubernetes cluster for the purpose of running ABB Ability™ History involves several steps, namely:

- Creating a resource group and provisioning it with a virtual network and the subnet where the Kubernetes cluster will be deployed.

- Provisioning a VPN gateway server with its public IP address, to which the VPN clients connect, and another subnet where the VPN clients appear inside the virtual network.

- Commissioning the actual Kubernetes cluster.

- Provisioning the Kubernetes cluster, which includes an update to the internal configuration of the cluster worker nodes and the deployment of a few Kubernetes resources (ConfigMaps, Secrets, and so on) required to run and configure the ABB Ability™ History deployment.

- Deploying ABB Ability™ History on the Kubernetes cluster using a Helm chart.

Before going into these steps in detail, it is useful to describe the rationale of the solution architecture.

Architectural Considerations Of The Solution

The reusable building block of this solution is the Resource Group. It is up to the engineer to decide whether one or more customers will share the same Kubernetes cluster, but the single indivisible block that realizes the solution is the Resource Group.

Resource Groups can be decommissioned easily, so allocated resources (and their related costs) can be freed quickly.

Create An SSH Key In The Linux Machine

Create an SSH key pair on the Linux machine with a meaningful name. Its public key is used in later steps of the deployment (example: $HOME/.ssh/navaratri.pub).

ssh-keygen
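Run without arguments, ssh-keygen prompts for the file name and passphrase. For scripted setups, a non-interactive variant could look like the sketch below. The function name make_ssh_key is hypothetical, and the key type (RSA, 4096 bits) and the empty passphrase are assumptions, not requirements stated by this guide.

```shell
# Create an RSA key pair at the given path unless one already exists,
# then print the path of the public key.
make_ssh_key() {
    key_path=$1
    if [ ! -f "$key_path" ]; then
        mkdir -p "$(dirname "$key_path")"
        # -N "" sets an empty passphrase (an assumption; set one if your policy requires it).
        ssh-keygen -q -t rsa -b 4096 -N "" -f "$key_path" || return 1
    fi
    echo "$key_path.pub"
}

# Example: make_ssh_key "$HOME/.ssh/navaratri"
```

The printed path is the value to use later for SSH_PUBKEY_FILE.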

Create VPN Certificates

There are different ways to create a root certificate and its client key; the steps below are one such method.

You can use your existing root certificate and client key, or create new certificates for connecting to the VPN.

Run the shell script below to create the VPN certificates that are used to connect to the Azure VPN and to access its resources (for example the AKS node pool).

ROOT_CERT_NAME="P2SRootCert"

USERNAME="client"

PASSWORD=""

mkdir -p certs

cd certs

sudo ipsec pki --gen --outform pem > rootKey.pem

sudo ipsec pki --self --in rootKey.pem --dn "CN=$ROOT_CERT_NAME" --ca --outform pem > rootCert.pem

#ROOT_CERTIFICATE=$(openssl x509 -in rootCert.pem -outform der | base64 -w0 ; echo)

sudo ipsec pki --gen --size 4096 --outform pem > "clientKey.pem"

sudo ipsec pki --pub --in "clientKey.pem" | \
sudo ipsec pki \
--issue \
--cacert rootCert.pem \
--cakey rootKey.pem \
--dn "CN=$USERNAME" \
--san "$USERNAME" \
--flag clientAuth \
--outform pem > "clientCert.pem"

openssl pkcs12 -in "clientCert.pem" -inkey "clientKey.pem" -certfile rootCert.pem -export -out "client.p12" -password "pass:$PASSWORD"

After the script runs successfully, make sure that the certs folder has been created and contains the generated certificates, as shown in the screenshot below.
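The same check can be scripted. The sketch below assumes the file names written by the certificate script (rootKey.pem, rootCert.pem, clientKey.pem, clientCert.pem and client.p12); the function name missing_cert_files is hypothetical.

```shell
# List the expected certificate files that are missing from the given
# directory (defaults to "certs"). Prints nothing when all are present.
missing_cert_files() {
    dir=${1:-certs}
    for f in rootKey.pem rootCert.pem clientKey.pem clientCert.pem client.p12; do
        [ -f "$dir/$f" ] || echo "$f"
    done
}

# Example: missing_cert_files certs
# To inspect the client certificate afterwards:
#   openssl x509 -in certs/clientCert.pem -noout -subject -dates
```

An empty result means all five files were generated.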

Resource Group and Network Provisioning

The Azure Resource Group and the required network are provisioned using the bin/network-setup script. First log in to Azure with the command az login.

Then set the subscription that you have access to with the command:

az account set --subscription 'subscriptionid'

Once the Azure login and subscription are set, create a custom config file under the etc/ folder of the extracted build path (not the system /etc directory), and then run the network-setup script with it.

See etc/network-setup-config.sample for an example of a configuration file. Customize it to your desired settings.

In the config file you create from etc/network-setup-config.sample, set ROOT_CERT_FILENAME to the full path of the root certificate created in the earlier steps, RG to the resource group name, and AZURE_LOCATION to the location where you want to place the resource group.

bin/network-setup --config-file etc/your-config-file

Note: This step can take a considerable amount of time to complete.
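Because the script runs for a long time, it may be worth checking first that the config file sets the three parameters named above. The sketch below assumes, without having verified it against etc/network-setup-config.sample, that the config file uses shell-style KEY=value lines; the function name missing_config_keys is hypothetical.

```shell
# Print each required parameter that the config file does not set.
# ASSUMPTION: the file uses shell-style KEY=value lines (optionally
# prefixed with "export"); adjust the pattern if the format differs.
missing_config_keys() {
    cfg=$1
    for key in ROOT_CERT_FILENAME RG AZURE_LOCATION; do
        grep -Eq "^[[:space:]]*(export[[:space:]]+)?$key=" "$cfg" || echo "$key"
    done
}

# Example: missing_config_keys etc/your-config-file
```

An empty result means all three parameters are present; it does not validate their values.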

Cluster Commissioning

Cluster commissioning is the easiest part of the whole process. The only option you need to set is the SSH key that will be used to log in to the worker nodes over SSH. All other options use the values generated in the network setup stage above.

Cluster names are of the form aah-k8s-NN.

SUBNET_ID=`az network vnet subnet show -g "$RG" --vnet-name aah-k8s-vnet --name cluster-subnet | jq -r '.id'`

echo $SUBNET_ID

SSH_PUBKEY_FILE=$HOME/.ssh/navaratri.pub

# Create the AKS cluster

az aks create --name aah-k8s-01 \
--resource-group "$RG" --location "$AZURE_LOCATION" \
--ssh-key-value "$SSH_PUBKEY_FILE" \
--network-plugin azure --nodepool-name nodepool01 \
--vnet-subnet-id "$SUBNET_ID" \
--node-count=2 \
--node-vm-size=Standard_D2s_v3

Note: The value of the --node-vm-size parameter can be decided based on the system sizing requirements.
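After the create command returns, the state of the cluster can be queried with az aks show. This is a small sketch rather than a step of the delivered scripts; the function name aks_state is hypothetical.

```shell
# Print the provisioning state of an AKS cluster (for example "Succeeded").
# $1 = resource group, $2 = cluster name
aks_state() {
    az aks show --resource-group "$1" --name "$2" \
        --query provisioningState --output tsv
}

# Example: aks_state "$RG" aah-k8s-01
```

A state other than Succeeded suggests that the cluster is still being created or that the creation failed.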

Cluster Provisioning

Cluster provisioning is the step of configuring kernel parameters on the worker nodes and installing a few Kubernetes resources (ConfigMaps, Secrets, and so on) required by Ability™ History to run.

Connecting To The VPN

Connecting to the resource group VPN allows you to access resources with private IP addresses in the Resource Group, for example the node pools.

There are different ways to connect to the VPN in the Azure resource group, and you are free to use any method of your choice. The shell script below is one such method; you can save it to a file and execute it.

# Configure the VPN client

VPN_CFG_URL=`az network vnet-gateway vpn-client show-url \
--resource-group "$RG" --name vnet-gw-vpn-server`

U=`echo "$VPN_CFG_URL" | tr -d '"'`

echo $U

VPN_CFG_BASENAME=vpnclientcfg

curl "$U" --silent -o "$VPN_CFG_BASENAME.zip"

unzip -x vpnclientcfg.zip -d "$VPN_CFG_BASENAME"

INSTALL_DIR="/etc/"

VIRTUAL_NETWORK_NAME="aahk8svnet"

VPN_SERVER=$(xmllint --xpath "string(/VpnProfile/VpnServer)" ${VPN_CFG_BASENAME}/Generic/VpnSettings.xml)

VPN_TYPE=$(xmllint --xpath "string(/VpnProfile/VpnType)" ${VPN_CFG_BASENAME}/Generic/VpnSettings.xml | tr '[:upper:]' '[:lower:]')

ROUTES=$(xmllint --xpath "string(/VpnProfile/Routes)" ${VPN_CFG_BASENAME}/Generic/VpnSettings.xml)

sudo cp "${INSTALL_DIR}ipsec.conf" "${INSTALL_DIR}ipsec.conf.backup"

sudo cp "${VPN_CFG_BASENAME}/Generic/VpnServerRoot.cer_0" "${INSTALL_DIR}ipsec.d/cacerts"

sudo cp "certs/client.p12" "${INSTALL_DIR}ipsec.d/private"

sudo tee -a "${INSTALL_DIR}ipsec.conf" <<EOF
conn $VIRTUAL_NETWORK_NAME
  keyexchange=ikev2
  type=tunnel
  leftfirewall=yes
  left=%any
  leftauth=eap-tls
  leftid=%client
  right=$VPN_SERVER
  rightid=%$VPN_SERVER
  rightsubnet=$ROUTES
  leftsourceip=%config
  auto=add
  esp=aes256gcm16,aes128gcm16!
EOF

echo ": P12 client.p12 ''" | sudo tee -a "${INSTALL_DIR}ipsec.secrets" > /dev/null

sudo ipsec restart

sudo ipsec up $VIRTUAL_NETWORK_NAME

echo "Successfully configured the VPN client"
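The final echo in the script runs regardless of whether the tunnel actually came up. One way to check the tunnel is to look for an ESTABLISHED entry in the strongSwan status output, as in the sketch below. The function name vpn_is_up is hypothetical, and the connection name is the value of VIRTUAL_NETWORK_NAME used above.

```shell
# Return success if strongSwan reports the named connection as established.
# $1 = connection name as written to ipsec.conf
vpn_is_up() {
    sudo ipsec status "$1" | grep -q "ESTABLISHED"
}

# Example:
#   vpn_is_up aahk8svnet && echo "VPN is up" || echo "VPN is down"
```

If the connection is not established, the output of sudo ipsec statusall is usually a good starting point for troubleshooting.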

Kernel Customization

Kernel customization is done using Ansible. Its purpose is to set the kernel parameter vm.max_map_count to a value that allows the Ability™ History pods to run smoothly.

The Ansible playbook connects to the nodes of the node pool using their IP addresses, which can be collected with the commands below.

# Get the cluster credentials

CLUSTER_NAME=aah-k8s-01

LOCATION=norwayeast

RG=aah-k8s-demo

KUBECFG="$HOME/.kube/$LOCATION-$CLUSTER_NAME.config"

az aks get-credentials -n "$CLUSTER_NAME" -g "$RG"

az aks get-credentials -n "$CLUSTER_NAME" -g "$RG" --file "$KUBECFG"

# Get the node IP addresses:

kubectl get node --kubeconfig="$KUBECFG" --output=wide

Add the IP addresses to the inventory file ansible/inventories/inventory.yaml, with one entry for each node displayed by the command above.

Run the Ansible playbook to reconfigure the kernel. Make sure that the client machine is connected to the VPN configured in the steps above.
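If only the IP addresses are needed for the inventory, a JSONPath query can print them one per line. This is a sketch; the function name node_internal_ips is hypothetical, and it assumes the nodes report an address of type InternalIP, which is the usual case.

```shell
# Print the internal IP address of every node, one per line.
# $1 = path to the kubeconfig file
node_internal_ips() {
    kubectl get nodes --kubeconfig="$1" \
        --output=jsonpath='{range .items[*]}{.status.addresses[?(@.type=="InternalIP")].address}{"\n"}{end}'
}

# Example: node_internal_ips "$KUBECFG"
```

Each printed address corresponds to one entry in ansible/inventories/inventory.yaml.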

ansible-playbook --inventory ansible/inventories/inventory.yaml \
ansible/playbooks/vm-max-map-count.yaml
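To confirm that the playbook took effect, the current value can be read back from every node with an ad-hoc Ansible command. The sketch below assumes that the inventory file already carries the connection settings the playbook uses; the function name show_max_map_count is hypothetical.

```shell
# Read vm.max_map_count on every host in the inventory.
# $1 = path to the inventory file
show_max_map_count() {
    ansible all --inventory "$1" --module-name command \
        --args "cat /proc/sys/vm/max_map_count"
}

# Example: show_max_map_count ansible/inventories/inventory.yaml
```

Every node should report the value set by the playbook.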

Kubernetes Resources

The StorageClass

Ensure the Storage Class managed-premium-retain exists in the cluster.

kubectl get storageclass

kubectl get storageclass/managed-premium-retain

The output should list a StorageClass named managed-premium-retain. If it does not exist, create it with:

kubectl create -f kubernetes/resources/ABB-Ability-History-sc.yaml

If the command was successful the new StorageClass should be visible now:

if kubectl get storageclass/managed-premium-retain > /dev/null 2>&1; then
  echo "The required StorageClass exists."
else
  echo "The required StorageClass does not exist."
fi

The Namespace

Ensure the Namespace where you are going to deploy exists in the cluster. It is recommended to store the namespace in an environment variable because it adds flexibility when managing several namespaces.

export NS=client1

kubectl get namespace/$NS

# If it does not exist, create the namespace with:

kubectl create namespace "$NS"
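The check and the creation can be combined so that the step is safe to repeat. This is a minimal sketch; the function name ensure_namespace is hypothetical.

```shell
# Create the namespace only when it does not exist yet.
# $1 = namespace name
ensure_namespace() {
    if kubectl get namespace "$1" >/dev/null 2>&1; then
        echo "Namespace $1 already exists."
    else
        kubectl create namespace "$1"
    fi
}

# Example: ensure_namespace "$NS"
```

Running it a second time only reports that the namespace is already present.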

Secrets - Image Registry credentials and Application passwords

Ensure that the credentials for retrieving images from the Container Image Registry (cpmplus.azurecr.io) exist in a Kubernetes Secret. For the time being, the Secret must be named cpmplus-azurecr-creds.

Raise a Jira ticket to get the credentials for the container registry (cpmplus.azurecr.io).

The Secret must exist in the namespace where you will deploy ABB Ability™ History.

kubectl get secrets --namespace "$NS"

kubectl get secrets/cpmplus-azurecr-creds --namespace "$NS"

If it does not exist, create it. It is recommended to keep the username and password in a file called cpmplus.auth, separated by a colon (like username:password). Assuming that this file exists:

AUTH_FILE=etc/cpmplus.auth

REGISTRY=cpmplus.azurecr.io

CRI_USERNAME="$(cut -d: -f1 "$AUTH_FILE")"

# -f2- keeps everything after the first colon, so the password may contain colons
CRI_PASSWORD="$(cut -d: -f2- "$AUTH_FILE")"

# Create the secret:

kubectl create secret docker-registry cpmplus-azurecr-creds \
--namespace="$NS" --docker-server="$REGISTRY" \
--docker-username="$CRI_USERNAME" --docker-password="$CRI_PASSWORD"

# Check that it exists:

kubectl get secrets/cpmplus-azurecr-creds --namespace "$NS"
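Beyond checking that the Secret exists, its content can be decoded to confirm that it points at the expected registry. This is a sketch; the function name registry_secret_config is hypothetical. Note that the decoded output contains the registry password in clear text.

```shell
# Print the decoded Docker config JSON stored in the registry Secret.
# $1 = namespace
registry_secret_config() {
    kubectl get secret cpmplus-azurecr-creds --namespace "$1" \
        --output=jsonpath='{.data.\.dockerconfigjson}' | base64 -d
}

# Example: registry_secret_config "$NS"
```

The auths section of the printed JSON should contain an entry for cpmplus.azurecr.io.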

The application root password and the application robot password are each set in a corresponding Secret. Choose strong passwords, especially if the application will be exposed to the public internet:

# Create secret for aah-root-passwd

ROOTPASSWORD='<provide a strong password>'

kubectl create secret generic aah-root-passwd --namespace "$NS" \
--from-literal=ROOTPASSWORD="$ROOTPASSWORD"

# Create secret for aah-robot-passwd

ROBOTPASSWORD='<provide a strong password>'

kubectl create secret generic aah-robot-passwd --namespace "$NS" \
--from-literal=ROBOTPASSWORD="$ROBOTPASSWORD"

The Secret names must be exactly aah-root-passwd and aah-robot-passwd, because these are the names expected by the StatefulSet that manages the Ability™ History deployment. The StatefulSet reads the root and robot passwords from these Secrets and uses them to set the ROOTPASSWORD and ROBOTPASSWORD environment variables in the containers, which in turn define the application passwords.
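One possible way to obtain strong passwords is to generate them randomly instead of typing them. The sketch below is an illustration only: the function name gen_password is hypothetical, and it is an assumption that the application accepts the characters produced by Base64 encoding (letters, digits, + and /).

```shell
# Generate a random password: 24 random bytes encoded as 32 Base64 characters.
gen_password() {
    head -c 24 /dev/urandom | base64
}

ROOTPASSWORD=$(gen_password)
ROBOTPASSWORD=$(gen_password)
echo "Generated passwords of length ${#ROOTPASSWORD} and ${#ROBOTPASSWORD}."
```

The generated values can then be passed to the kubectl create secret commands above. Store them somewhere safe, since they are not printed.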

The ConfigMaps

The Vtrin server configuration file is shared across all Pods in the Ability™ History Helm release. The easiest way to share this content is to create a ConfigMap from the configuration file and have all Pods mount it.

Let's create the ConfigMap:

kubectl create configmap vtrin-netserver-config --namespace "$NS" \
--from-file etc/Vtrin-NetServer.exe.config

The StatefulSet already mounts the configmap as a file in the Pods' storage namespaces.

Ability™ History is deployed using a Helm chart, which also creates a ConfigMap in the cluster with the same name as the given Helm release. This ConfigMap contains a few environment variables that are useful for operations (see below) and also lets you configure the amount of debugging information produced by the RTDB_ServiceManager process (enabled by making the RTDB_SERVICEMANAGER_DEBUG variable available in the system).

Actual Ability™ History deployment

You can parametrize your Ability™ History deployment by configuring the contents of the values.yaml file. Some relevant values are:

- imageRegistry: the container image registry to pull from.

- imageRepository: the name of the container image to pull (i.e. regular, dev or warmstorage).

- imageTag: the image version to pull. Images are tagged with the repository branch name they come from, a date following the ISO 8601 standard in the format YYYYMMDD, and a build ordinal for the day.

For a basic deployment there is no need to set anything in particular in helm/AbilityHistory/values.yaml.

Choose a convenient release name for the deployment and assign it to the environment variable REL:

cd helm

export REL=rel01

helm install "$REL" AbilityHistory -n "$NS"

Get External IP of Deployment

You can get the external IP with the command below and use it to connect to Ability™ History View from any browser.

kubectl get svc -n "$NS"
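When the address is needed in a script, a JSONPath query can print only the service names and their external IP addresses. This is a sketch; the function name service_external_ips is hypothetical. Services without a load balancer address print an empty second column.

```shell
# Print "service-name<TAB>external-ip" for every Service in the namespace.
# $1 = namespace
service_external_ips() {
    kubectl get svc --namespace "$1" \
        --output=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.loadBalancer.ingress[0].ip}{"\n"}{end}'
}

# Example: service_external_ips "$NS"
```

The external IP can take a short while to appear after the deployment, so an empty column may simply mean that the load balancer is still being provisioned.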

Get Pods Status

Use the command below to get the status of the pods:

kubectl get pods --namespace "$NS"

Operational considerations

The following environment variables exist in each Ability™ History running container to help you track down (within the container) what version you are using:

- K8S_NS: Kubernetes namespace where the container is running.

- K8S_IMAGE_REGISTRY: Image registry where the container comes from.

- K8S_IMAGE_REPOSITORY: Image repository for the container.

- K8S_IMAGE_TAG: Image tag for the container.
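These variables can also be read from outside the container with kubectl exec. The sketch below assumes that the env command is available in the container image; the function name pod_k8s_env is hypothetical, and the pod name must be taken from the kubectl get pods output.

```shell
# Print the K8S_* environment variables of a running pod.
# $1 = namespace, $2 = pod name
pod_k8s_env() {
    kubectl exec --namespace "$1" "$2" -- env | grep '^K8S_'
}

# Example (the pod name is a placeholder):
#   pod_k8s_env "$NS" <pod-name>
```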
